Note: This article does not constitute financial advice. The ideas shared are exploratory and based on ongoing research and interest in the space.

The Challenge of Data

Information has always required effort to capture. Before digital systems, data was sorted by hand. Today, the volume and speed of information make traditional methods obsolete. How much data is too much? It depends on how much of it you actually need to consume. Networks store and index data, making it accessible on demand. Blockchains record information openly and quickly, making it available to anyone.

As blocks became faster and networks more capable, developers faced a new challenge: they needed both real-time access and historical perspective. Real-time data lets you act first. Historical data lets you analyze the past and apply its lessons strategically. In the early days of blockchain, transactions could take minutes or even hours to finalize, and moving assets between networks was slow and cumbersome. Now, these operations happen in seconds, and large volumes of transactions, from trades to gaming events, are processed every second.
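One common way to get both views at once is to backfill history first and then switch to a live feed without gaps or duplicates at the boundary. The Python sketch below is illustrative only: the `Event` record and the `stitch` helper are hypothetical, and it assumes the backfill always ends on a complete block.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator


@dataclass
class Event:
    # Hypothetical decoded on-chain event: block height, event name, payload.
    block: int
    name: str
    data: dict = field(default_factory=dict)


def stitch(history: Iterable[Event], live: Iterable[Event]) -> Iterator[Event]:
    """Yield historical events first, then live events from newer blocks only.

    Assumes the backfill covers complete blocks, so any live event at or
    below the last backfilled block is a duplicate and can be skipped.
    """
    last_block = -1
    for ev in history:
        last_block = max(last_block, ev.block)
        yield ev
    for ev in live:
        if ev.block > last_block:
            yield ev
```

For example, a backfill ending at block 2 followed by a live feed that starts at block 2 yields blocks 1, 2, 3 with no repeat.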

Raw blockchain data is validated, linked to earlier blocks, and stored, but it is not processed for any particular use. For projects tracking liquidity pools or specific game events, loading entire blocks is inefficient. Developers need filtered, structured streams focused on the events that matter. Speed gives an edge. Historical perspective gives depth. Together, they provide the foundation for a real advantage.
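To make the filtering idea concrete, here is a minimal Python sketch. The `Block` and `Log` shapes and the `filter_events` helper are hypothetical simplifications, not the schema of any particular chain or indexing service. It filters on the client to show the predicate; in a real streaming setup the same predicate would ideally run on the server so irrelevant data is never transferred.

```python
from dataclasses import dataclass, field
from typing import Iterable, Iterator


@dataclass
class Log:
    # Hypothetical simplified log entry; real chains expose richer structures.
    address: str
    event: str
    args: dict = field(default_factory=dict)


@dataclass
class Block:
    number: int
    logs: list[Log]


def filter_events(
    blocks: Iterable[Block], address: str, events: set[str]
) -> Iterator[tuple[int, Log]]:
    """Yield (block number, log) only for logs emitted by `address`
    whose event name is in `events`; everything else is dropped."""
    target = address.lower()  # compare addresses case-insensitively
    for block in blocks:
        for log in block.logs:
            if log.address.lower() == target and log.event in events:
                yield block.number, log
```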

How Filtering, Timing, and Analytics Create Advantage

A continuous flow of blockchain data can reach developers immediately, while filters ensure that only relevant information is delivered. The difference is like watching a goal live instead of reading about it later. Speed does not predict the future, but it provides a decisive advantage over competitors who receive information late.

The Moneyball story offers a clear lesson. In 2002, the Oakland A's had a small budget and could not afford top players. Traditional scouts relied on obvious statistics, while the front office focused on overlooked numbers such as on-base percentage. By filtering and analyzing the right data, they built a competitive team cheaply and won twenty games in a row. Success does not come from collecting all the data, having the largest budget, or following obvious signals. It comes from the right data, structured and filtered for action.

In blockchain, structured real-time streams combined with historical data provide the same advantage. Developers and analysts can act instantly and use historical patterns to train AI models. Projects gain trust, efficiency, and a strategic edge. Global demand for tokenization is growing because investors want continuous access to markets. Capital will continue to flow into blockchain infrastructure, and projects that rely on raw or slow data will fall behind.

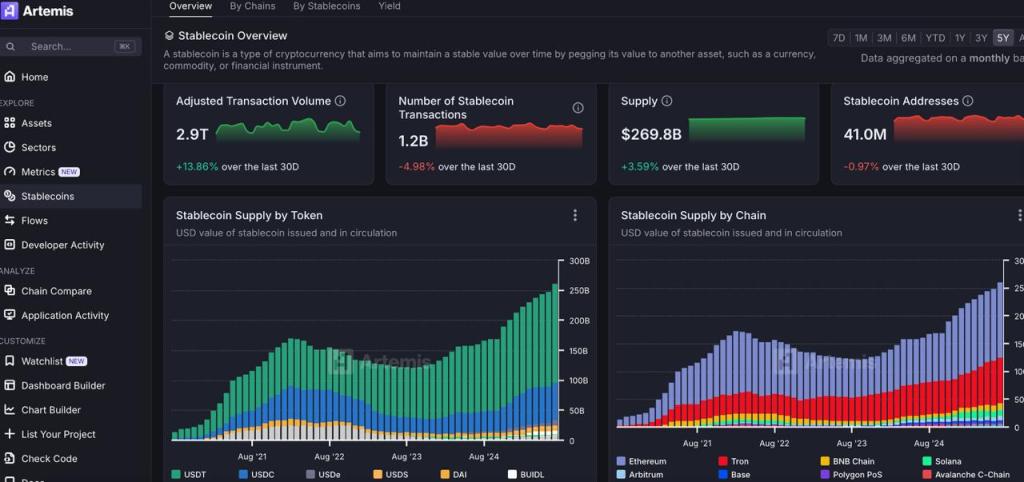

Beyond streams and filtering, analytics platforms now ingest specific data and convert it into aggregated insights and statistics. As the screenshots illustrate, these platforms let users see trends, summarize complex flows, and make decisions based on filtered, structured information. They bridge the gap between raw blockchain data and actionable business intelligence. We will provide examples showing how different platforms collect, analyze, and visualize specific streams for broader statistical understanding.
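The aggregation step these platforms perform can be illustrated with a small Python sketch that rolls individual swaps up into hourly volume per pool. The `(pool, unix_timestamp, amount)` tuple format and the `hourly_volume` function are assumptions made for illustration; a production platform would more likely do this inside a database or stream processor.

```python
from collections import defaultdict
from typing import Iterable


def hourly_volume(
    swaps: Iterable[tuple[str, int, float]],
) -> dict[tuple[str, int], float]:
    """Sum swap amounts per (pool, hour bucket).

    Each swap is a hypothetical (pool, unix_timestamp, amount) tuple;
    the bucket key is the Unix timestamp of the start of that hour.
    """
    totals: defaultdict[tuple[str, int], float] = defaultdict(float)
    for pool, ts, amount in swaps:
        hour = ts - ts % 3600  # round down to the start of the hour
        totals[(pool, hour)] += amount
    return dict(totals)
```

The same pattern extends to counts, averages, or unique traders per bucket by changing what is accumulated.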

Filtering data alone is not enough; it must also reach the user at the right moment. Instead of repeatedly querying static sources, modern solutions push updates in real time. This makes it possible to monitor a liquidity pool or a game event without processing entire blocks of irrelevant data. Public solutions exist, but the deeper data that provides a competitive advantage may only be available through structured streams. Serious projects filter data and deliver it to users at the right time, building trust and actionable insight.
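The difference between polling and pushing can be sketched in-process: each consumer registers a filter once, and the producer pushes only matching events to it. `EventHub` below is a hypothetical class written for this illustration, not a real library; a networked service would deliver events over a long-lived connection rather than a direct function call.

```python
from typing import Any, Callable

Event = dict[str, Any]
Predicate = Callable[[Event], bool]
Handler = Callable[[Event], None]


class EventHub:
    """Minimal in-process push dispatcher (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self._subs: list[tuple[Predicate, Handler]] = []

    def subscribe(self, predicate: Predicate, handler: Handler) -> None:
        # Register once; no repeated queries are needed afterwards.
        self._subs.append((predicate, handler))

    def publish(self, event: Event) -> None:
        # Push the event only to subscribers whose filter matches it,
        # so each consumer never sees irrelevant events.
        for predicate, handler in self._subs:
            if predicate(event):
                handler(event)
```

Usage: a consumer interested in one pool calls `hub.subscribe(lambda e: e["pool"] == "ETH/USDC", on_event)` and then simply waits for `on_event` to be called.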

Turning Data Into Strategic Power

In my own experience working with multiple blockchain architectures and bridges, transactions that once took hours now finalize in seconds. Projects that rely on fast, filtered streams gain a measurable advantage. Historical records make it possible to reconstruct any past event and provide depth for analytics and AI.
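Reconstructing a past state from historical records usually means replaying events in order. The sketch below assumes a hypothetical pool whose events carry signed changes to its two reserves, with events in the same block already listed in emission order; `PoolEvent` and `reserves_at` are illustrative names. Integers are used because on-chain token amounts are typically stored in integer base units.

```python
from dataclasses import dataclass


@dataclass
class PoolEvent:
    # Hypothetical event: signed changes to the pool's two reserves.
    block: int
    delta0: int
    delta1: int


def reserves_at(events: list[PoolEvent], block: int) -> tuple[int, int]:
    """Rebuild the pool's reserves as of `block` by replaying events.

    sorted() is stable, so events within the same block keep their
    original (assumed emission) order.
    """
    r0 = r1 = 0
    for ev in sorted(events, key=lambda e: e.block):
        if ev.block > block:
            break  # everything after this point is in the future
        r0 += ev.delta0
        r1 += ev.delta1
    return r0, r1
```

Because the function only depends on the event log, the same history can answer "what were the reserves at block N?" for any N.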

The combination of speed, focus, historical insight, and analytics turns raw blockchain data into strategic advantage. Success does not come from volume or visibility alone. It comes from choosing the right data, processing it intelligently, and delivering it when it matters most. Projects that understand this principle act faster, make better decisions, and create advantages that competitors relying on delayed or unfiltered data cannot match.

Over the next decade, the amount of money circulating on blockchains will grow. Those who master data streams, filtering, historical insight, and analytical visualization will come out ahead. Understanding how to combine real-time access with historical depth, structured filtering, and analytics is no longer optional. It is the difference between reacting late to opportunities and shaping them before anyone else.

We have already applied this in practice. My team and I delivered indexers and built Firehose pipelines with Substreams for high-usage on-chain products. This gave users the cleanest information possible and showed that demand for structured, real-time data keeps rising. Every product that cares about its data will need this approach, and we know how to make it work effectively.